k8s Deployment Process

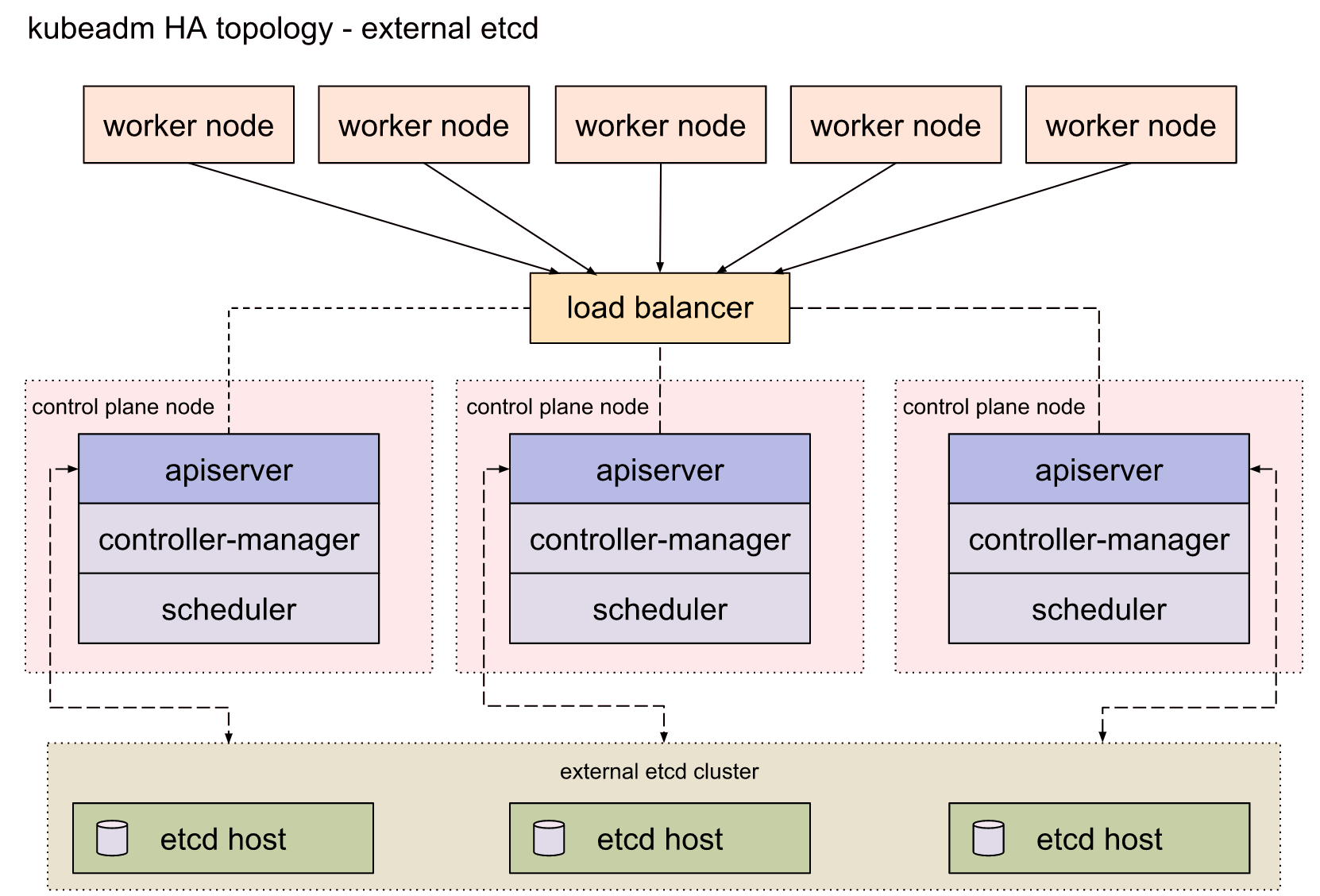

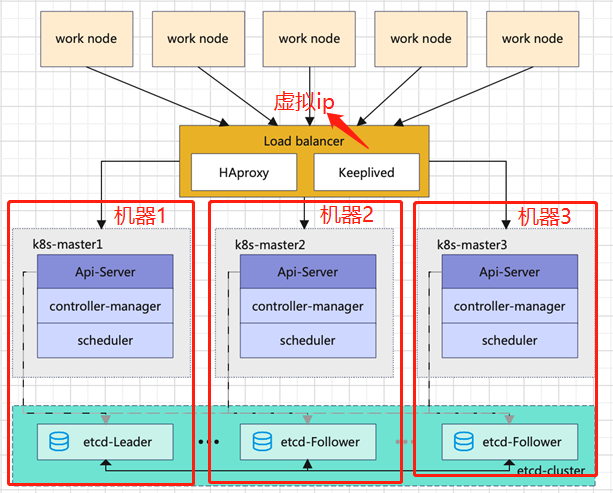

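The k8s high-availability architecture used in this article is shown in the figures above: multiple masters plus a separate, external etcd cluster.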

I. Server Resource Initialization

| IP | Hostname | Role |
| --- | --- | --- |
| 192.168.32.120 | none | VIP (virtual IP that floats among the 3 masters) |
| 192.168.32.129 | k8s-master-1 | k8s-master, etcd, keepalived |
| 192.168.32.131 | k8s-master-2 | k8s-master, etcd, keepalived |
| 192.168.32.132 | k8s-master-3 | k8s-master, etcd, keepalived |
| 192.168.32.130 | k8s-node-1 | k8s-node |

Versions

OS: Ubuntu 18.04, 64-bit

kubectl: v1.23.0

kubelet: v1.23.0

kubeadm: v1.23.0

All other components: latest version

Change the hostname of each machine to match the table above.
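Here is a minimal sketch of this step. It assumes the IP-to-hostname mapping from the table, and both function names are hypothetical. The `hosts_entries` helper also prints `/etc/hosts` lines. Adding them is an assumption, but later steps address machines by name (for example `scp ... k8s-master-2:...`), so every node needs to be able to resolve the others.

```shell
# Hostname for each IP in the table above (hypothetical helper).
hostname_for_ip() {
  case "$1" in
    192.168.32.129) echo k8s-master-1 ;;
    192.168.32.131) echo k8s-master-2 ;;
    192.168.32.132) echo k8s-master-3 ;;
    192.168.32.130) echo k8s-node-1 ;;
    *) return 1 ;;  # unknown IP (the VIP 192.168.32.120 has no hostname)
  esac
}

# Print /etc/hosts lines so that every node can resolve the others by name
# (assumption: no internal DNS server provides these names).
hosts_entries() {
  for ip in 192.168.32.129 192.168.32.131 192.168.32.132 192.168.32.130; do
    printf '%s %s\n' "$ip" "$(hostname_for_ip "$ip")"
  done
}
```

Run this as root on each machine. For example, on 192.168.32.129: `hostnamectl set-hostname "$(hostname_for_ip 192.168.32.129)"` followed by `hosts_entries >> /etc/hosts`.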

Reboot the system.

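Disable swap

Swap is roughly comparable to virtual memory on Windows: when RAM fills up, it lets the server keep running (slowly) instead of freezing outright. However, newer k8s versions require swap to be off, and by default the kubelet refuses to start while swap is enabled.

    sed -ri 's/.*swap.*/#&/' /etc/fstab  # disable permanently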
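The one-liner above comments out every line that contains "swap", including lines that are already comments. The slightly stricter sketch below only touches active entries whose type field is `swap`; the function name `disable_swap_in_fstab` is hypothetical. Editing /etc/fstab only takes effect at the next boot, so run `swapoff -a` to turn swap off immediately.

```shell
# Comment out active swap entries in an fstab-format file (default /etc/fstab)
# so swap stays off after reboots. A line is changed only if its first
# non-blank character is not '#' and it has a whitespace-delimited "swap" field.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Run it as root together with `swapoff -a`, then reboot as planned.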

Confirm that swap is currently off

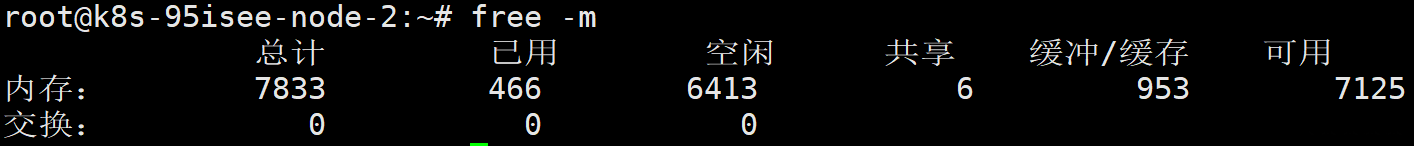

Run free -m in a terminal and look at the output.

If the last line (Swap) reads 0 0 0, swap is off.
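If you want to script this check, here is a small sketch that parses the `Swap:` row of `free -m`; the helper names are hypothetical. `LC_ALL=C` forces English row labels, because a localized system may print a translated label instead.

```shell
# Print the total swap size (MiB) from `free -m` output read on stdin.
swap_total_mb() {
  awk '$1 == "Swap:" { print $2 }'
}

# Exit 0 only when swap is fully disabled on this machine.
swap_is_off() {
  [ "$(LC_ALL=C free -m | swap_total_mb)" = "0" ]
}
```

Usage: `swap_is_off && echo "swap is off"`.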

Open the required ports
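Here is a sketch of which ports to open. It assumes ufw (Ubuntu's default firewall front end) and hypothetical role names. The ports come from the Kubernetes "Ports and Protocols" reference for control-plane and worker nodes, plus etcd's client/peer ports and flannel's default VXLAN port. keepalived's VRRP traffic is IP protocol 112 rather than a TCP/UDP port, so it is not covered here and must be allowed separately.

```shell
# Print the ports (in ufw syntax) that a given role needs (hypothetical helper).
required_ports() {
  case "$1" in
    master)
      echo 6443/tcp          # kube-apiserver (also reached through the VIP)
      echo 2379:2380/tcp     # etcd client and peer traffic
      echo 10250/tcp         # kubelet API
      echo 10257/tcp         # kube-controller-manager
      echo 10259/tcp         # kube-scheduler
      echo 8472/udp          # flannel VXLAN (assumes the default vxlan backend)
      ;;
    node)
      echo 10250/tcp         # kubelet API
      echo 30000:32767/tcp   # NodePort Services
      echo 8472/udp          # flannel VXLAN
      ;;
    *) return 1 ;;
  esac
}
```

Usage on a master: `for p in $(required_ports master); do sudo ufw allow "$p"; done`.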

II. Install Docker

1. Install Docker

    sudo apt install docker.io

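2. Configure Docker

After installation, Docker needs some configuration: switching its image download source to a domestic (China-based) mirror, and changing its cgroup driver.

What are cgroups? Think of them as the Linux kernel's mechanism for isolating processes and limiting their resources; Docker uses them to isolate containers. Docker's default cgroup driver is cgroupfs, while the kubelet in this setup uses the systemd cgroup driver (kubeadm's default since v1.22). Running two cgroup managers side by side can cause problems, and the kubelet expects the container runtime to use the same driver it does. So we switch Docker to systemd as well.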

Both settings live in /etc/docker/daemon.json, so we configure them together. First open daemon.json for editing:

    sudo vim /etc/docker/daemon.json

Then enter the following content:

    {
    ...
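Here is a minimal sketch of a daemon.json that carries both settings, written by a hypothetical `write_daemon_json` helper. Setting `exec-opts` to `native.cgroupdriver=systemd` is Docker's way of selecting the systemd cgroup driver. The mirror URL is a placeholder argument, because which domestic mirror to use depends on what you have access to.

```shell
# Write a Docker daemon.json that selects the systemd cgroup driver and,
# if a second argument is given, adds it as a registry mirror (placeholder).
write_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  local mirror="${2:-}"
  mkdir -p "$(dirname "$target")"
  if [ -n "$mirror" ]; then
    cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
  else
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
  fi
}
```

Usage as root: `write_daemon_json /etc/docker/daemon.json https://<your-mirror>`, then restart Docker as described next.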

Save with :wq, then restart Docker:

    sudo systemctl daemon-reload
    sudo systemctl restart docker

Now check Docker's cgroup setting with docker info | grep Cgroup. If it shows systemd, the change worked.

    docker info | grep Cgroup
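To check only the driver value, this sketch extracts it from `docker info` output; the helper name is hypothetical. `docker info --format '{{.CgroupDriver}}'` prints the same value directly.

```shell
# Print the cgroup driver from `docker info` output read on stdin.
cgroup_driver() {
  sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}
```

Usage: `[ "$(docker info 2>/dev/null | cgroup_driver)" = systemd ] && echo ok`.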

III. Install the etcd cluster

1. On k8s-master-1, create the files needed to generate the certificates

    cat > ca-csr.json <<EOF
    ...

2. Make the certificate validity longer here: 87600h = 10 years.

    cat > ca-config.json <<EOF
    ...
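Here is a sketch of what a cfssl `ca-config.json` with the 10-year validity might look like. The profile name `etcd` and the usage list are assumptions; whatever profile name you choose must match the `-profile` passed to `cfssl gencert`. As a check on the arithmetic: 87600 h / 24 = 3650 days, which is 10 years of 365 days.

```shell
# Write a cfssl signing config whose certificates are valid for 87600h.
# The profile name "etcd" is hypothetical.
write_ca_config() {
  local dir="${1:-.}"
  cat > "$dir/ca-config.json" <<'EOF'
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "etcd": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF
}
```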

    cat > etcd-csr.json <<EOF
    ...
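Here is a sketch of an `etcd-csr.json`. It assumes one certificate is shared by all three etcd members, so its `hosts` list must contain every address etcd is reached on: 127.0.0.1 plus the three master IPs from the table. The `names` subject fields are placeholder values.

```shell
# Write etcd-csr.json for a certificate shared by the three etcd members.
write_etcd_csr() {
  local dir="${1:-.}"
  cat > "$dir/etcd-csr.json" <<'EOF'
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.32.129",
    "192.168.32.131",
    "192.168.32.132"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing"
    }
  ]
}
EOF
}
```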

3. Generate the certificates and send them to each master node

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca
    ...
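Here is a sketch of the whole step. It assumes the profile name `etcd`, the certificate directory `/etc/etcd/ssl`, and SSH access from k8s-master-1 to the other masters; all three are hypothetical. The `run` helper is an illustrative dry-run wrapper: with `DRY_RUN=1` it prints commands instead of executing them.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

ETCD_SSL_DIR=/etc/etcd/ssl  # hypothetical certificate directory

gen_etcd_certs() {
  # Self-signed CA: produces ca.pem and ca-key.pem
  run sh -c 'cfssl gencert -initca ca-csr.json | cfssljson -bare ca'
  # etcd certificate signed by that CA: produces etcd.pem and etcd-key.pem
  run sh -c 'cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=etcd etcd-csr.json | cfssljson -bare etcd'
}

distribute_etcd_certs() {
  # k8s-master-1 generated the files, so copy them locally there
  run mkdir -p "$ETCD_SSL_DIR"
  run cp ca.pem etcd.pem etcd-key.pem "$ETCD_SSL_DIR/"
  # and over SSH to the other two masters
  for host in k8s-master-2 k8s-master-3; do
    run ssh "$host" mkdir -p "$ETCD_SSL_DIR"
    run scp ca.pem etcd.pem etcd-key.pem "$host:$ETCD_SSL_DIR/"
  done
}
```

Preview with `DRY_RUN=1 gen_etcd_certs; DRY_RUN=1 distribute_etcd_certs`, then run both without `DRY_RUN` as root on k8s-master-1.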

4. Install etcd and send it to each master node

Download the package manually from https://github.com/etcd-io/etcd/releases/download/v3.5.7/etcd-v3.5.7-linux-amd64.tar.gz

    cd <packages folder>
    ...
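Here is a sketch of the unpack-and-distribute step. It uses the tarball name from the URL above and assumes `/usr/local/bin` as the install location and SSH access to the other masters. `run` is an illustrative dry-run wrapper that only prints commands when `DRY_RUN` is set.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

ETCD_VER=v3.5.7

install_etcd_binaries() {
  local dir="etcd-${ETCD_VER}-linux-amd64"
  # Unpack the release tarball in the current (packages) folder
  run tar -xzf "${dir}.tar.gz"
  # Install locally on k8s-master-1 ...
  run cp "$dir/etcd" "$dir/etcdctl" /usr/local/bin/
  # ... and copy the same binaries to the other two masters
  for host in k8s-master-2 k8s-master-3; do
    run scp "$dir/etcd" "$dir/etcdctl" "$host:/usr/local/bin/"
  done
}
```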

5. Generate the etcd configuration file on each node. Important: in each file, change the IP addresses and the node name after --name=, and don't drop any spaces.

Run on k8s-master-1:

    cat > /etc/systemd/system/etcd.service <<EOF
    ...

Run on k8s-master-2:

    cat > /etc/systemd/system/etcd.service <<EOF
    ...

Run on k8s-master-3:

    cat > /etc/systemd/system/etcd.service <<EOF
    ...
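Only `--name` and the IP differ between the three unit files, so a template function avoids the typos this step warns about. This sketch makes two assumptions: the masters' hostnames serve as etcd member names, and the certificates live in `/etc/etcd/ssl`. Both must match your certificate files, and each member's name must match its entry in `--initial-cluster`. The flags themselves are standard etcd v3.5 flags. The `\\` at the end of lines inside the unquoted heredoc writes a literal backslash, so the unit keeps its line continuations.

```shell
ETCD_SSL_DIR=/etc/etcd/ssl  # hypothetical; must match where the certs were copied
ETCD_CLUSTER="k8s-master-1=https://192.168.32.129:2380,k8s-master-2=https://192.168.32.131:2380,k8s-master-3=https://192.168.32.132:2380"

# Write an etcd systemd unit for one member: NAME IP [TARGET]
write_etcd_service() {
  local name="$1" ip="$2" target="${3:-/etc/systemd/system/etcd.service}"
  cat > "$target" <<EOF
[Unit]
Description=etcd
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd \\
  --name=${name} \\
  --data-dir=/var/lib/etcd \\
  --listen-peer-urls=https://${ip}:2380 \\
  --initial-advertise-peer-urls=https://${ip}:2380 \\
  --listen-client-urls=https://${ip}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls=https://${ip}:2379 \\
  --initial-cluster=${ETCD_CLUSTER} \\
  --initial-cluster-token=etcd-cluster \\
  --initial-cluster-state=new \\
  --cert-file=${ETCD_SSL_DIR}/etcd.pem \\
  --key-file=${ETCD_SSL_DIR}/etcd-key.pem \\
  --trusted-ca-file=${ETCD_SSL_DIR}/ca.pem \\
  --client-cert-auth \\
  --peer-cert-file=${ETCD_SSL_DIR}/etcd.pem \\
  --peer-key-file=${ETCD_SSL_DIR}/etcd-key.pem \\
  --peer-trusted-ca-file=${ETCD_SSL_DIR}/ca.pem \\
  --peer-client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}
```

Run it as root on each master with that master's own name and IP. For example, on k8s-master-2: `write_etcd_service k8s-master-2 192.168.32.131`.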

6. Start the etcd service

    mkdir -p /var/lib/etcd
    ...
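Here is a sketch of the start sequence on one member; the helper names are hypothetical. It uses `systemctl start --no-block` so the command returns immediately instead of waiting. If the unit uses `Type=notify` (as etcd's example units commonly do), the first member of a new cluster cannot report ready until a peer is up, which is why a plain start can hang as described below. `run` is an illustrative dry-run wrapper.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

start_etcd_member() {
  run mkdir -p /var/lib/etcd        # data directory used by --data-dir
  run systemctl daemon-reload       # pick up the new etcd.service
  run systemctl enable etcd         # start on boot
  # Queue the start job and return instead of blocking until etcd is ready
  run systemctl start --no-block etcd
}
```

Run `start_etcd_member` as root on all three masters within a short time of each other, then follow progress with `journalctl -u etcd -f`.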

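If etcd fails to start, first check which ports are in use.

If the command hangs for a while and then fails with a timeout, a single etcd member simply cannot come up on its own. The etcd service has in fact started and is waiting for its peers, and once etcd is started on the second node it will no longer hang.

If it exits with an error immediately, check that etcd.service is correct, including formatting, spaces, IP addresses, and name.

If you see output like:

    [root@k8s-master01 ~]# systemctl enable --now etcd
    ...

first check the error details with journalctl -xe. You may see something like the following:

    [root@k8s-master01 ~]# systemctl status etcd
    ...

Run netstat -anptu | grep 2380 and netstat -anptu | grep 2379 to see which processes hold the ports, then kill them:

    kill -9 <PID>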
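Here is a sketch that extracts the PIDs holding a port from `netstat -anptu` output, so they can be passed to `kill`; the helper name is hypothetical. It matches on the local-address column only, so connections that merely target the port on a remote host are ignored.

```shell
# Read `netstat -anptu` output on stdin; print the unique PIDs whose
# local address ends in :PORT (the last column is PID/Program).
pids_on_port() {
  awk -v port="$1" '
    $4 ~ (":" port "$") && $NF ~ /^[0-9]+\// {
      split($NF, a, "/"); print a[1]
    }' | sort -u
}
```

Usage as root: `netstat -anptu | pids_on_port 2380 | xargs -r kill -9`.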

For any other error, check the configuration file and the IP addresses.

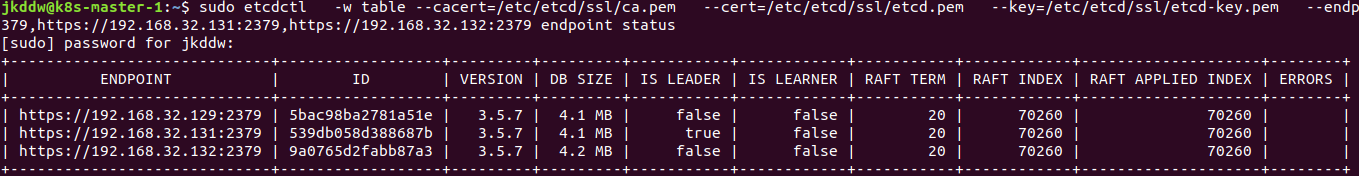

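7. Check that every etcd member is healthy. Once the service is running on all three hosts, run:

    etcdctl \
    ...

If the output shows one leader and the other members as non-leaders, etcd is running normally and has formed a cluster.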
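Here is a sketch of a full health check. It assumes the certificate files `ca.pem`, `etcd.pem` and `etcd-key.pem` live in `/etc/etcd/ssl` (hypothetical). `etcdctl endpoint health` checks each member, and `endpoint status --write-out=table` includes an `IS LEADER` column, which is where the leader and non-leaders show up. `run` is an illustrative dry-run wrapper.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

ETCD_SSL_DIR=/etc/etcd/ssl  # hypothetical certificate directory
ETCD_ENDPOINTS="https://192.168.32.129:2379,https://192.168.32.131:2379,https://192.168.32.132:2379"

check_etcd_cluster() {
  # "endpoint health" probes each member; "endpoint status" shows the leader
  for sub in health status; do
    run etcdctl \
      --cacert="$ETCD_SSL_DIR/ca.pem" \
      --cert="$ETCD_SSL_DIR/etcd.pem" \
      --key="$ETCD_SSL_DIR/etcd-key.pem" \
      --endpoints="$ETCD_ENDPOINTS" \
      endpoint "$sub" --write-out=table
  done
}
```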

IV. Install keepalived

1. Install keepalived on each of the three master servers

    sudo apt-get install keepalived

2. Generate the configuration file on each master node

On k8s-master-1:

    cat > /etc/keepalived/keepalived.conf << EOF
    ...

On k8s-master-2:

    cat > /etc/keepalived/keepalived.conf << EOF
    ...

On k8s-master-3:

    cat > /etc/keepalived/keepalived.conf << EOF
    ...
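The three keepalived.conf files differ in only a few values, so here is a sketch of a template. `interface ens33` and the VIP come from this article. `virtual_router_id 51`, the `auth_pass` value, and the suggested 100/90/80 priorities are assumptions. keepalived only honours `nopreempt` when the initial state is `BACKUP`, which is why every node uses `BACKUP` rather than one of them using `MASTER`.

```shell
# Write keepalived.conf for one master: NAME PRIORITY [TARGET]
write_keepalived_conf() {
  local name="$1" priority="$2" target="${3:-/etc/keepalived/keepalived.conf}"
  cat > "$target" <<EOF
global_defs {
    router_id ${name}
}

vrrp_instance VI_1 {
    state BACKUP
    nopreempt
    interface ens33
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass k8s-vip
    }
    virtual_ipaddress {
        192.168.32.120
    }
}
EOF
}
```

Usage as root: `write_keepalived_conf k8s-master-1 100` on master1, `write_keepalived_conf k8s-master-2 90` on master2, and `write_keepalived_conf k8s-master-3 80` on master3.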

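Note: keepalived must be configured as BACKUP in non-preemptive mode (nopreempt). Suppose master1 goes down: the VIP fails over to another master, and because of nopreempt it does not automatically move back when master1 recovers.

Start keepalived in the order master1 -> master2 -> master3, running the following on each in turn:

    systemctl enable keepalived
    ...

Once keepalived is running, ip addr on master1 shows that the VIP 192.168.32.120 is bound to the ens33 interface:

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    ...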
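Here is a small sketch for scripting this check; the helper name is hypothetical. It reads `ip addr` output and succeeds only if the given address is assigned on the given interface.

```shell
# Read `ip addr` output on stdin; exit 0 if VIP is assigned on interface DEV.
vip_on_interface() {
  awk -v vip="$1" -v dev="$2" '
    /^[0-9]+: / { cur = $2; sub(/:$/, "", cur) }   # interface header line
    $1 == "inet" && cur == dev && index($2, vip "/") == 1 { found = 1 }
    END { exit !found }'
}
```

Usage: `ip addr | vip_on_interface 192.168.32.120 ens33 && echo "VIP is on this node"`.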

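V. Install k8s

kubelet: the core k8s service, which runs on every node and manages its pods

kubeadm: an integrated tool for quickly installing k8s; we use it for the k8s deployment on the masters and on node1.

kubectl: the k8s command-line tool; after deployment, all further operations are done with it

Install these components on all 3 masters and on the node.

Normally all three can be installed directly with apt-get, but for various reasons the official download source is not available, so we use a domestic mirror instead. That is easy too: just run the following five commands in order:

    # make apt support SSL (HTTPS) transport
    ...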
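Here is a sketch of this step. It assumes the Aliyun Kubernetes apt mirror and its `kubernetes-xenial` suite, with packages pinned to 1.23.0 using the legacy repository's `<version>-00` naming. The mirror URL and the pin format are assumptions, so check them against the mirror you actually use. `apt-key` is deprecated on newer Ubuntu releases but still works on 18.04. `run` is an illustrative dry-run wrapper.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

K8S_VERSION=1.23.0
# Assumption: Aliyun's mirror of the legacy Kubernetes apt repository
K8S_APT_MIRROR=https://mirrors.aliyun.com/kubernetes/apt

# Pinned package specs, e.g. kubelet=1.23.0-00 (legacy repo version naming)
k8s_packages() {
  for pkg in kubelet kubeadm kubectl; do
    printf '%s=%s-00\n' "$pkg" "$1"
  done
}

install_k8s_tools() {
  # Let apt fetch packages over HTTPS
  run apt-get update
  run apt-get install -y apt-transport-https ca-certificates curl
  # Trust the mirror's signing key and register the repository
  run sh -c "curl -fsSL $K8S_APT_MIRROR/doc/apt-key.gpg | apt-key add -"
  run sh -c "echo 'deb $K8S_APT_MIRROR/ kubernetes-xenial main' > /etc/apt/sources.list.d/kubernetes.list"
  run apt-get update
  # Install the pinned versions and keep apt from upgrading them
  run apt-get install -y $(k8s_packages "$K8S_VERSION")
  run apt-mark hold kubelet kubeadm kubectl
}
```

Preview with `DRY_RUN=1 install_k8s_tools`, then run it as root on every master and on the node.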

VI. Install the Kubernetes master nodes

1. Create the kubeadm-conf.yaml and kube-flannel.yml configuration files, paying attention to the following settings:

★ Set the IPs and the corresponding master hostnames in certSANs. Don't leave out any master hostname, or init will fail with a DNS error.

★ kubernetesVersion: change it to the version you are installing.

★ Don't leave out the networking section.

Download kube-flannel.yml from https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml. If that URL is unreachable, download it through a proxy and upload it to the server manually.

kubeadm-conf.yaml:

    apiVersion: kubeadm.k8s.io/v1beta2
    ...
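Here is a sketch of a kubeadm-conf.yaml that matches this architecture. It sets the VIP as `controlPlaneEndpoint`, lists every master IP and hostname in `certSANs`, points at the external etcd cluster, and includes a `networking` section whose `podSubnet` matches flannel's default `10.244.0.0/16`. The etcd certificate paths and the `imageRepository` mirror are assumptions. `kubeadm config images list --config kubeadm-conf.yaml` previews which images the file resolves to.

```shell
# Write a kubeadm ClusterConfiguration for the HA layout in this article.
write_kubeadm_conf() {
  local target="${1:-kubeadm-conf.yaml}"
  cat > "$target" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.23.0
# All API traffic goes through the keepalived VIP
controlPlaneEndpoint: "192.168.32.120:6443"
# Assumption: a reachable mirror of the upstream control-plane images
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - 192.168.32.120
  - 192.168.32.129
  - 192.168.32.131
  - 192.168.32.132
  - k8s-master-1
  - k8s-master-2
  - k8s-master-3
etcd:
  external:
    endpoints:
    - https://192.168.32.129:2379
    - https://192.168.32.131:2379
    - https://192.168.32.132:2379
    # Assumption: etcd client certificates stored in /etc/etcd/ssl
    caFile: /etc/etcd/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
networking:
  # flannel's default manifest expects 10.244.0.0/16
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
}
```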

2. Create the k8s cluster with kubeadm

    kubeadm init --config kubeadm-conf.yaml

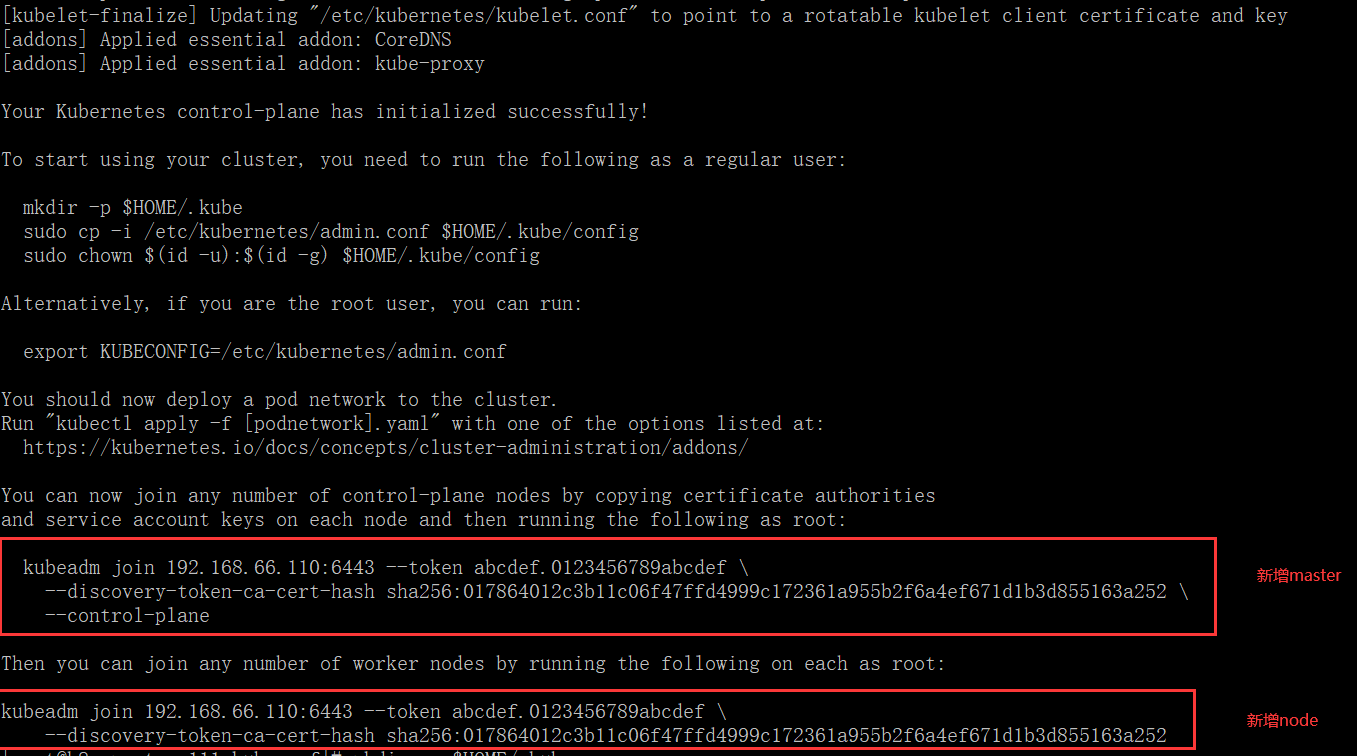

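If it succeeds, you'll see output like the figure above. Save the two join commands it prints: one for adding masters and one for adding worker nodes.

If you lose them, you can generate a new token on a master node (append --control-plane to the printed command when joining a master):

    kubeadm token create --print-join-command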

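3. Configure the kubectl tool

Running kubectl at this point fails with the following error:

    The connection to the server localhost:8080 was refused - did you specify the right host or port?

That's because kubectl has to be configured first (run on master-1):

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Now you can look at the cluster:

    sudo kubectl get nodes
    ...

On the other nodes (including the worker node), copy /etc/kubernetes/admin.conf from master-1, not the node's own admin.conf, and run the commands above again.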
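Here is a sketch of distributing the kubeconfig from k8s-master-1; the helper name is hypothetical, and `run` is an illustrative dry-run wrapper. As a variation on the steps above, it copies `admin.conf` straight to `~/.kube/config` on each target, which leaves the target's own `/etc/kubernetes` untouched. Run it after the other nodes have joined.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

copy_kubeconfig() {
  for host in k8s-master-2 k8s-master-3 k8s-node-1; do
    # Relative paths resolve to the remote user's home directory
    run ssh "$host" mkdir -p .kube
    run scp /etc/kubernetes/admin.conf "$host:.kube/config"
  done
}
```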

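4. Install the flannel network. After that, the master node shows as Ready. The manifest only needs to be applied once: flannel runs as a DaemonSet, so its pods are scheduled onto every node, masters included.

    kubectl apply -f kube-flannel.yml

    sudo kubectl get nodes
    ...

5. Join the other two masters to the cluster

Copy the Kubernetes certificates to the other two masters:

    scp -r /etc/kubernetes/pki/ k8s-master-2:/etc/kubernetes/
    scp -r /etc/kubernetes/pki/ k8s-master-3:/etc/kubernetes/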
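Copying the whole pki directory also copies master-1's node-specific certificates, which kubeadm join would otherwise generate for each new master itself. A narrower sketch copies only the cluster-wide files that the kubeadm HA documentation lists for manual certificate distribution: the cluster CA, the service-account key pair, and the front-proxy CA. With external etcd, the etcd client certificates referenced in kubeadm-conf.yaml must also exist on each master. `run` is an illustrative dry-run wrapper.

```shell
# Print-or-execute helper (illustrative): with DRY_RUN set, commands are echoed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Cluster-wide keys that every control-plane node must share
SHARED_PKI_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key"

copy_shared_pki() {
  for host in k8s-master-2 k8s-master-3; do
    run ssh "$host" mkdir -p /etc/kubernetes/pki
    for f in $SHARED_PKI_FILES; do
      run scp "/etc/kubernetes/pki/$f" "$host:/etc/kubernetes/pki/$f"
    done
  done
}
```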

On the other two masters, run the master join command, i.e. the one with --control-plane:

    kubeadm join 192.168.32.120:6443 --token vmempt.k844aidfta62xlvf --discovery-token-ca-cert-hash sha256:1748d97830db9d3ce24914efa084ce2322ebb28a8a18e146135dd5025ac79323 --control-plane

If joining fails, check the kubeadm-conf.yaml file and the flannel network installation.

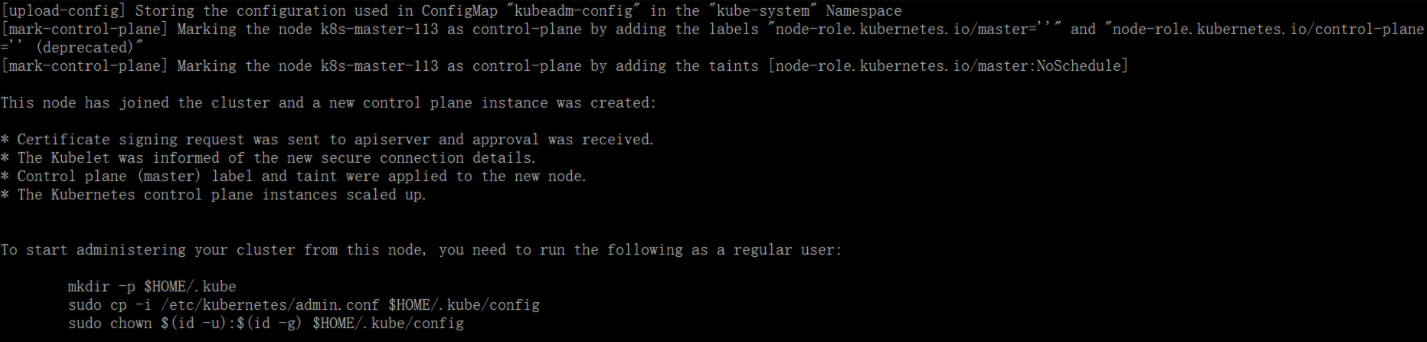

The join success message.

Check the nodes again and wait until every node is Ready:

    kubectl get nodes
    ...
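Here is a sketch of the wait step; the helper names are hypothetical. `not_ready_nodes` filters `kubectl get nodes` output by its STATUS column, and `wait_for_nodes` polls until that list is empty. `kubectl wait --for=condition=Ready node --all --timeout=300s` is a built-in alternative.

```shell
# Read `kubectl get nodes` output on stdin; print nodes whose STATUS is not Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Poll every 5 seconds until kubectl answers and every node is Ready.
wait_for_nodes() {
  while :; do
    nodes=$(kubectl get nodes 2>/dev/null) || nodes=""
    if [ -n "$nodes" ] && [ -z "$(printf '%s\n' "$nodes" | not_ready_nodes)" ]; then
      return 0
    fi
    sleep 5
  done
}
```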

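VII. Add the worker node to the cluster

Run the following on the node to join it to the cluster. If it fails, check the flannel network installation.

    kubeadm join 192.168.32.120:6443 --token vmempt.k844aidfta62xlvf --discovery-token-ca-cert-hash sha256:1748d97830db9d3ce24914efa084ce2322ebb28a8a18e146135dd5025ac79323

Check the nodes:

    sudo kubectl get nodes
    ...

That completes the k8s cluster: 3 masters, 3 etcd members, and 1 worker node.

To test running pods on the worker node, see the separate article.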